In the bustling field of deep learning, the phrase “data is the new oil” is often bandied about. However, as a precision agriculture team working with millions of images, we’ve come to realize that not all data is created equal. Selecting the right datasets for training and testing models is as crucial as the models themselves. This blog post will describe an example approach of leveraging self-supervised learning with models such as SimCLR to sift through massive amounts of image data and identify those that are truly valuable.

Training deep learning models for precision agriculture applications like corn plant counting is a sophisticated process. Precision agriculture involves identifying, analyzing, and managing variability within fields for optimum profitability, sustainability, and protection of land resources. In a world facing increasing food demand and diminishing arable land, it’s paramount to leverage all available tools to optimize yields, and the role of deep learning cannot be understated.

A deep learning model is only as good as the data it is trained on. Just like how a diet rich in nutrients can contribute to a healthier individual, a quality dataset can significantly boost the performance and accuracy of a deep learning model. For a model to identify and count corn plants accurately, it must be trained on a diverse and high-quality set of agricultural images.

What defines the value of images?

When we discuss the “value” of images for deep learning training, we’re referring to a multifaceted concept encompassing several important characteristics. These include:

1. Relevance: A valuable image is relevant to the task at hand. For instance, in a deep learning model trained to identify corn plants, images containing corn fields would be relevant and valuable. This is an obvious observation but in a real-world scenario of automated image collection from a drone, a percentage of the collected images is bound to be irrelevant to the task at hand.

2. Diversity: Valuable images encompass a wide variety of scenarios. They should include different lighting conditions, environments, and contexts that the model could encounter in real-world applications. This helps to ensure that the model generalizes well and doesn’t simply overfit to a narrow range of conditions seen in the training data.

3. Quality: High-resolution, clear, and noise-free images are more valuable for training as they provide precise and accurate information for the model to learn from. Blurry, low-resolution, or overly noisy images may lead to inaccuracies in the model’s learning. In a realistic scenario, more often than not, collected images are blurry and noisy and these are cases where the model needs to succeed on. Therefore, for our purposes, we could not be picky. As a rule of thumb, if a human can reasonably discern an object of interest, the model should too.

4. Informative Content: Images are considered valuable if they contain informative content from which the model can learn. For instance, an image that features corn plants at different growth stages would be more valuable than an image where all plants are at the same stage, as it offers more information to the model. This is true for applications with less homogeneity and agriculture fields are full of those.

5. Representative: Valuable images should be representative of the data distribution that the model will encounter when deployed. For example, if the model is to be used in different geographical areas and seasons, the training images should reflect this diversity.

6. Quantity: While the quality of data is paramount, having a large enough quantity of valuable images is also crucial. This ensures that the model is exposed to as many variations as possible, thus improving its ability to learn and generalize. Having a plethora of images in drone-based agriculture analysis is a double-edged sword. Multiple images with high diversity are necessary for the development of reliable models but at the same time, the annotation of images is costly.

By applying self-supervised learning techniques to assess the value of each image according to these factors, deep learning teams can ensure that the datasets used for training their models are as valuable and effective as possible.

Harnessing Self-Supervised Learning to Select Datasets

The sheer volume of data at our disposal poses a challenge: how can we identify the most useful images to annotate and train our models? This is where self-supervised learning models like SimCLR come into play.

SimCLR (Simple Framework for Contrastive Learning of Visual Representations) is a contrastive self-supervised learning algorithm. It is by no means the only one, but it is one of the more popular ones. It learns to recognize patterns and features in unlabeled datasets by identifying similarities and differences. This ability to sift through data and make sense of it without the need for explicit labels makes these models powerful tools for preselecting useful images.

In the initial phase, self-supervised learning models help us to understand what kind of images are present in our large database, by providing a high-dimensional representation of each image. This enables us to identify clusters of similar images and outliers. The identified clusters guide us in deciding which images are worth annotating for supervised learning. Image representatives from a wider breadth of scenarios, including different light conditions, growth stages, and plant health statuses, are often prioritized for labeling.

The Results: Higher F1-score with Fewer Images

By using this selective approach, we’ve managed to significantly improve the f1-score of our models, while reducing the amount of data needed. To showcase this, let’s take our corn plant counting application as an example.

Traditionally, training an accurate model to count corn plants would require several thousands of labeled images. The naive approach of selecting one image per field would result in several tens of thousands of images to be annotated. Even if part of the image is annotated, we are looking at hundreds of plants per image, and hundreds of thousands if not a few millions of plants needed to be annotated. With our selective approach, we’ve been able to reduce this number drastically, while simultaneously increasing the model’s f1 score and robustness.

Our models, trained on these selectively curated datasets, can now accurately count corn plants in various scenarios, from different locations around the world and under various lighting conditions. The models can distinguish between plants and weeds, and count the number of plants in a range of growth stages, and even crowded fields. This higher accuracy contributes to higher degrees of automation that drive precise farming decisions, thus leading to enhanced profitability and sustainability.

The Process

Our transition from a naive approach to a more sophisticated, self-supervised image selection process was a gradual yet transformative one. Here, we will lay out the key steps that formed this progression.

Step 1: Naive Image Selection

In the early stages of our work, the approach to image selection was somewhat rudimentary. With our naive method, images were selected randomly or based on a simple criterion, like the time of capture or the geographical location. The lack of a more nuanced approach meant that the diversity and quality of images in our training dataset were often not optimal. As a result, the models trained on these datasets performed sub-optimally, with lower-than-expected f1-score and robustness.

From a production standpoint, this meant that several of the product solutions we developed required an extra layer of human QA to verify that the delivered output would meet the customer’s expectations. For low-volume products this was acceptable, but as the demand increased and the delivery timeline of the product shrank, human QAing became a significant bottleneck.

Step 2: Recognizing the Need for a More Sophisticated Approach

After observing these shortcomings, it became clear that a more sophisticated approach to image selection was necessary. This marked the beginning of our journey towards self-supervised learning for image selection. The idea was to harness models like SimCLR to learn valuable features from our images without the need for explicit labels and use this information to guide our image selection process.

Step 3: Implementing Self-Supervised Learning

Our next step was to implement the SimCLR architecture and fit the self-supervised iterative training and testing processes into our existing deep-learning pipeline. Preparing the data, carefully selecting augmentation strategies, and visually evaluating the model performance, are all part of a successful pipeline.

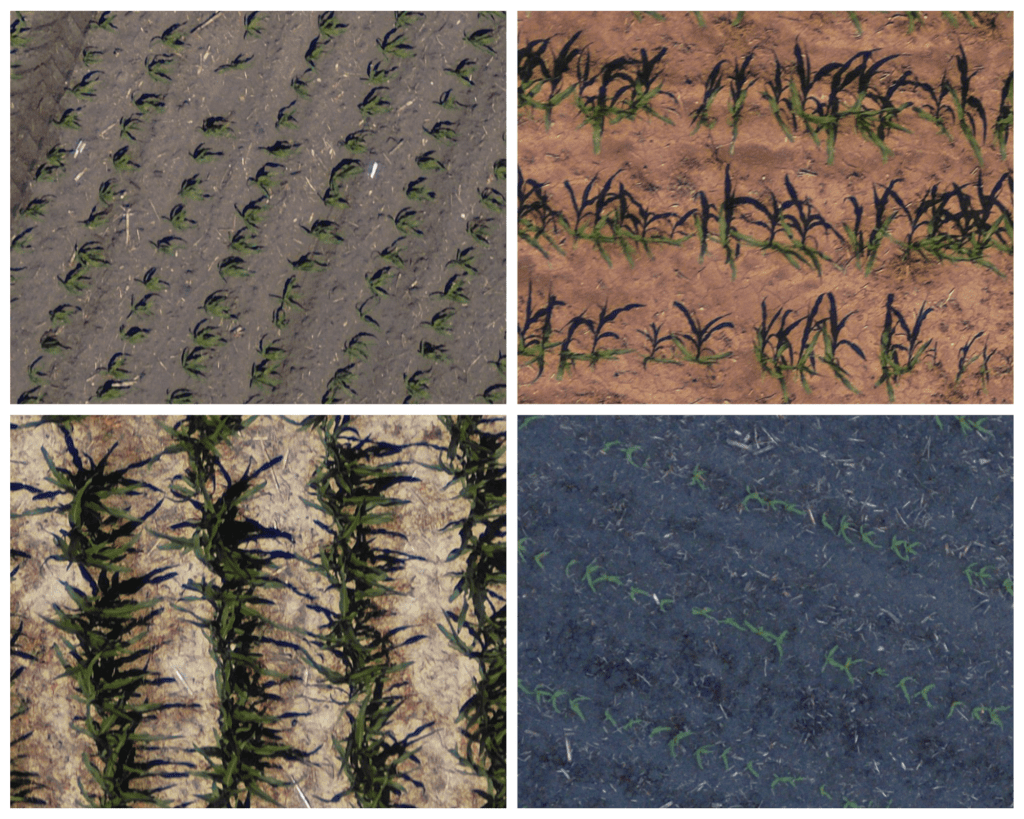

For the data preparation, it is essential to select the size and resolution of the images to train with. The example of plant counting from drone images requires the selection of images that contain the plants but also enough of the surrounding information that makes each image unique, such as the soil color, light conditions, etc. At the same time, self-supervised models work best with large batches and this imposes image size restrictions. A cropped area in the center of each image is a good compromise.

Selecting image augmentations is essentially the main point where domain-specific experience is required. The key is to select a set of augmentations that are relevant to the task at hand and reflect the kind of variations the model is expected to handle in its real-world application. For instance, in precision agriculture, useful augmentations might include changes in brightness to simulate different lighting conditions throughout the day, or rotations to steer the model off from clustering images with the same row direction together.

Once the models were trained, they provided us with high-dimensional embeddings for each image. These embeddings are essentially numerical representations that capture the essential features of the images.

Examples of images used to train the self-supervised model. These were generated by cropping and resizing larger images taken by Sentera sensors. A diverse set of soils, light conditions, and corn growth stages is important to capture all potential real world cases.

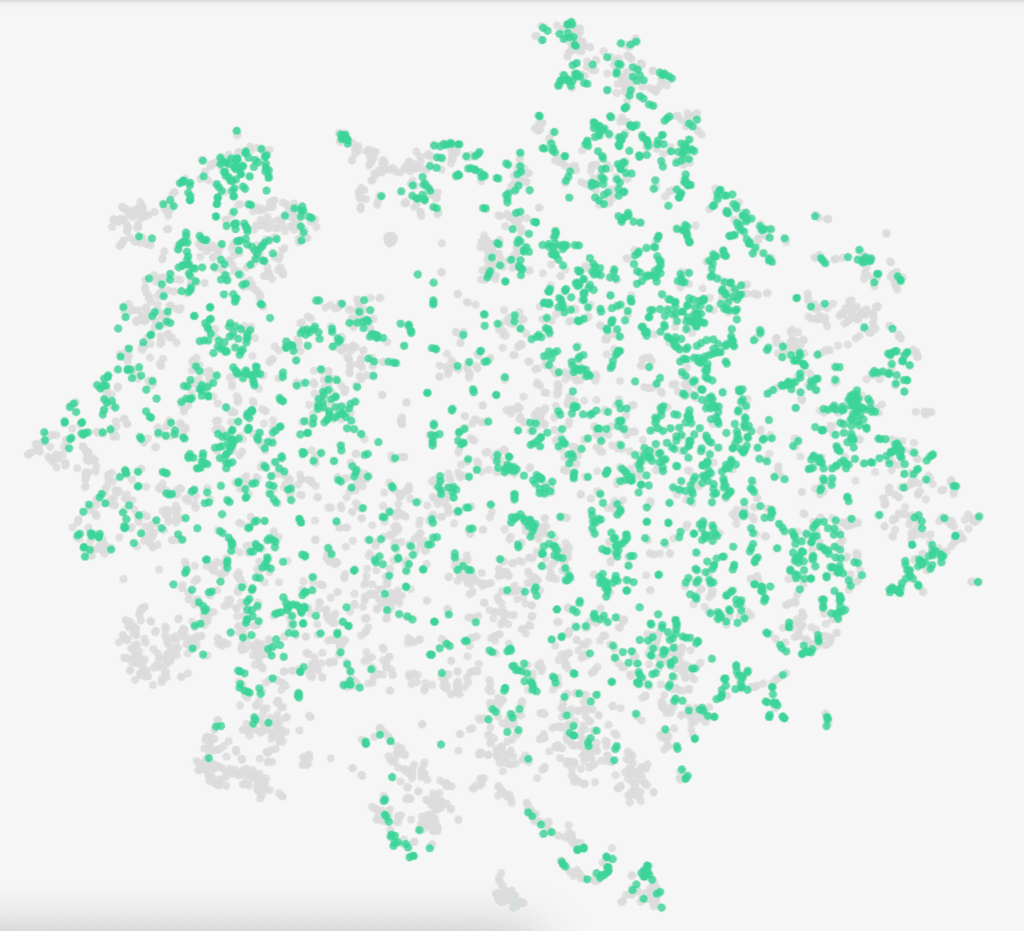

Step 4: Visualizing and Analyzing the Embeddings with Lightly

For the visualization of the embeddings, we utilized the Lightly platform which provided us with tools to visualize these high-dimensional embeddings in a way that is humanly interpretable. By using dimensionality reduction techniques, we were able to map these embeddings to a 2D or 3D space, where similar images cluster together. This capability, along with the filtering of images based on easy-to-compute image statistics (pixel value distribution, camera metadata, etc), gave us invaluable insights into the content of our images. We could identify clusters of similar images, spot unique or outlier images, and more importantly, understand the diversity and distribution of our data.

Low dimensional representation of the crop image embeddings. The green dots represent images passed some initial filtering steps. Out of those surviving the elimination step, some representatives are selected for human annotation. This method reduced the training set from over 50,000 candidate images to only 2,500.

Step 5: Guided Image Selection

With this newfound understanding, we could now select images more judiciously for our training datasets. For instance, we ensured that our dataset contained a good mix of images from each of the identified clusters, maximizing the diversity of the dataset. We also looked for outlier images, which often represent unique scenarios that a model needs to handle. This process resulted in a more balanced and representative dataset, leading to models with improved performance and robustness.

Conclusion: Looking to the Future

Our journey in precision agriculture applications underscores the critical importance of dataset selection. It’s not just about having vast amounts of data, but about having the right data. By using self-supervised learning models like SimCLR, we’ve been able to identify the most useful images out of millions, thus creating a smarter, more efficient pipeline for data curation.

This approach has far-reaching implications beyond precision agriculture. Any organization working with large image datasets can leverage these methods to optimize their training data selection. By placing emphasis on data quality over quantity, we can build more efficient, accurate, and reliable deep learning models.

This principle also aligns with our commitment to sustainability. By focusing on the quality of data rather than sheer volume, we reduce computational load and energy usage, aligning with global efforts to make AI greener and more sustainable.

To conclude, the saying that “not all data are created equal” rings true in our journey. By valuing the quality, diversity, and relevance of our data, we’ve managed to create deep learning models that are not just effective but also efficient and sustainable. Our experience reiterates that in the field of AI and deep learning, the right data can indeed make all the difference.